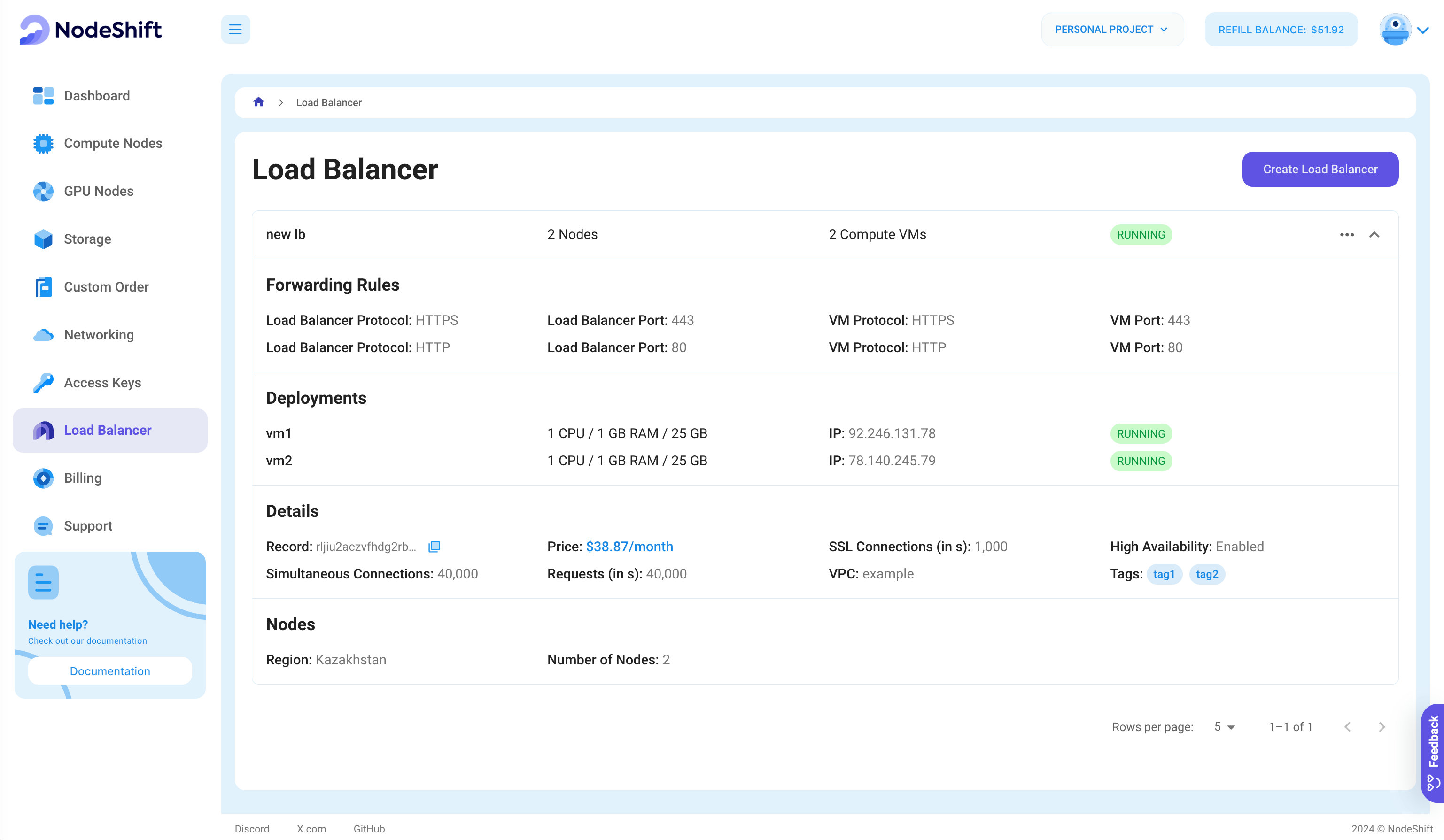

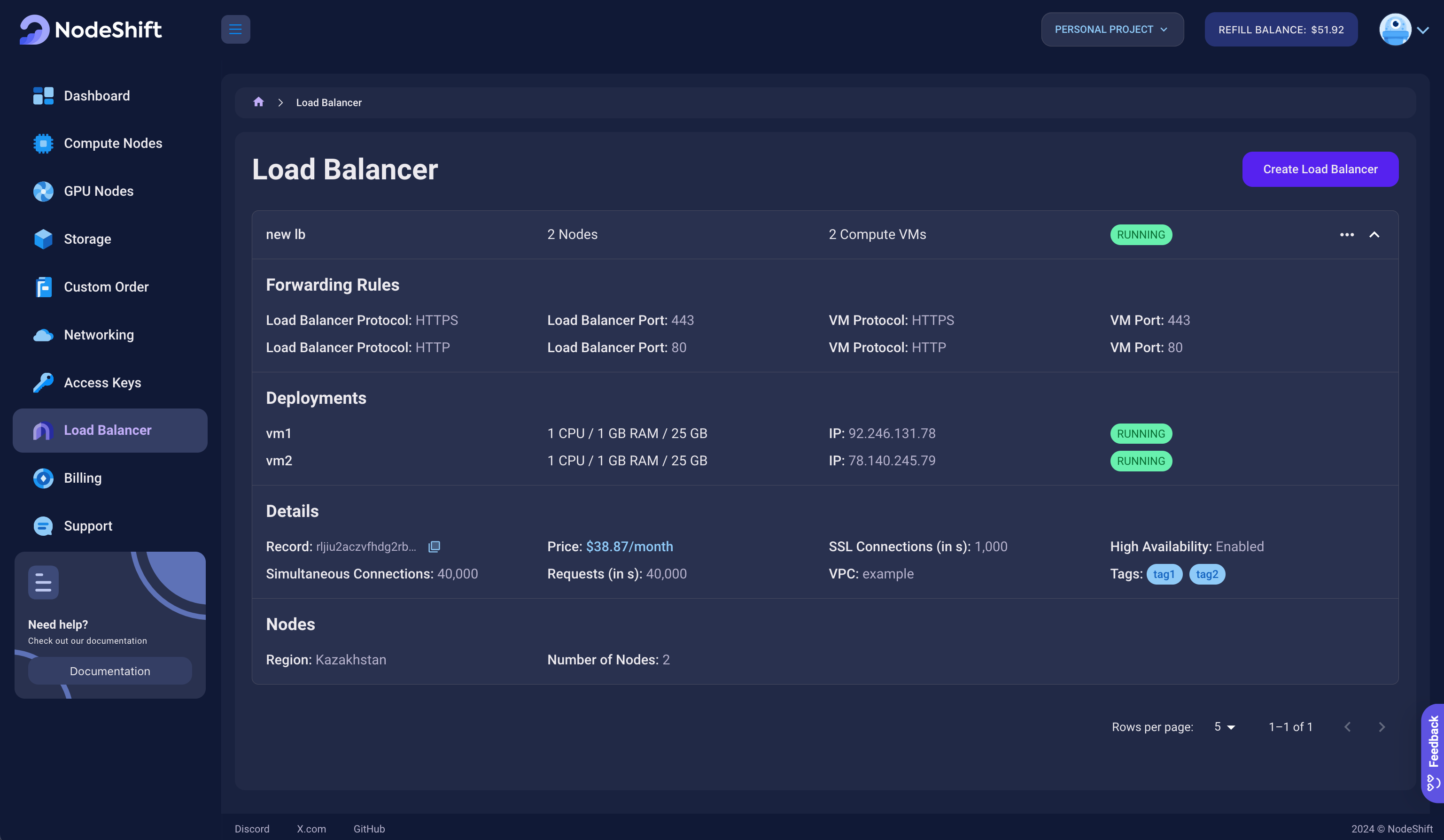

Load Balancer

A Load Balancer distributes incoming network traffic across multiple CPU Nodes so that no single CPU Node is overwhelmed. Spreading the load this way improves availability, reliability, and scalability. If one CPU Node experiences high demand or fails, the Load Balancer routes traffic to the remaining CPU Nodes, keeping the system responsive.
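This page does not say which algorithm the Load Balancer uses to pick a CPU Node. As an illustration only, the minimal Python sketch below assumes round-robin selection that skips unhealthy nodes. It shows how traffic keeps flowing when one CPU Node fails. `CpuNode`, `RoundRobinBalancer`, and the `healthy` flag are hypothetical names, not part of any product API.

```python
from dataclasses import dataclass


@dataclass
class CpuNode:
    """Hypothetical stand-in for a CPU Node behind the Load Balancer."""
    name: str
    healthy: bool = True  # in a real system a health check would set this


class RoundRobinBalancer:
    """Round-robin distribution that skips unhealthy nodes (illustrative only)."""

    def __init__(self, nodes: list[CpuNode]) -> None:
        if not nodes:
            raise ValueError("at least one CPU Node is required")
        self.nodes = list(nodes)
        self._next = 0  # index of the node to try first on the next request

    def pick(self) -> CpuNode:
        # Try each node at most once, continuing from where the last request left off.
        for _ in range(len(self.nodes)):
            node = self.nodes[self._next]
            self._next = (self._next + 1) % len(self.nodes)
            if node.healthy:
                return node
        raise RuntimeError("no healthy CPU Nodes available")


nodes = [CpuNode("node-1"), CpuNode("node-2"), CpuNode("node-3")]
balancer = RoundRobinBalancer(nodes)
nodes[1].healthy = False  # simulate a failed CPU Node
print([balancer.pick().name for _ in range(4)])
# ['node-1', 'node-3', 'node-1', 'node-3']
```

Once `node-2` is marked unhealthy, requests alternate between the two remaining nodes. If every node is down, `pick` raises an error instead of silently dropping the request.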

The Load Balancer requires at least two CPU Nodes to function properly. The CPU Nodes process the traffic, and the Load Balancer distributes requests evenly between them. You can also connect one VPC, along with its CPU Nodes, to the Load Balancer. The VPC acts as the network in which your CPU Nodes operate.
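The requirements above (at least two CPU Nodes, at most one VPC) can be expressed as a simple pre-flight check. This is a hypothetical sketch. `NodeRef`, `LoadBalancerConfig`, and `validate` are illustrative names. The rule that every CPU Node must run inside the attached VPC is an assumption, not documented behavior.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class NodeRef:
    """Hypothetical reference to a CPU Node and the VPC it runs in, if any."""
    name: str
    vpc: Optional[str] = None


@dataclass
class LoadBalancerConfig:
    """Hypothetical config: a single optional VPC field mirrors the 'one VPC' limit."""
    nodes: list[NodeRef] = field(default_factory=list)
    vpc: Optional[str] = None


def validate(config: LoadBalancerConfig) -> list[str]:
    """Return a list of problems; an empty list means the config looks usable."""
    errors = []
    if len(config.nodes) < 2:
        errors.append(f"need at least 2 CPU Nodes, got {len(config.nodes)}")
    # Assumption: when a VPC is attached, every CPU Node should run inside it.
    if config.vpc is not None:
        outside = [n.name for n in config.nodes if n.vpc != config.vpc]
        if outside:
            errors.append(f"CPU Nodes outside {config.vpc}: {', '.join(outside)}")
    return errors


print(validate(LoadBalancerConfig([NodeRef("node-1")])))
# ['need at least 2 CPU Nodes, got 1']
```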

A Load Balancer is ideal when multiple servers or CPU Nodes handle user traffic, especially when that traffic spikes at peak times. It also provides fault tolerance: if one CPU Node goes down, the others pick up the load without affecting your users.

You can set several parameters when configuring the Load Balancer. First, forwarding rules determine how traffic is routed to specific CPU Nodes. Each rule is based on a protocol, such as HTTP or HTTPS, and can direct traffic according to a specific port.
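A forwarding rule can be modeled as a protocol plus a port. The sketch below assumes that each rule maps an entry port on the Load Balancer to a target port on the CPU Nodes and that the first matching rule wins. `ForwardingRule`, `match_rule`, and the port numbers are hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ForwardingRule:
    """Hypothetical forwarding rule: a protocol plus entry and target ports."""
    protocol: str     # "HTTP" or "HTTPS", stored in upper case
    entry_port: int   # port the Load Balancer accepts traffic on
    target_port: int  # port on the CPU Nodes that receives the traffic


def match_rule(rules: list[ForwardingRule], protocol: str, port: int) -> Optional[ForwardingRule]:
    """Return the first rule whose protocol and entry port match, or None."""
    for rule in rules:
        if rule.protocol == protocol.upper() and rule.entry_port == port:
            return rule
    return None  # no rule covers this protocol/port combination


rules = [
    ForwardingRule("HTTP", 80, 8080),
    ForwardingRule("HTTPS", 443, 8443),
]
print(match_rule(rules, "https", 443))
# ForwardingRule(protocol='HTTPS', entry_port=443, target_port=8443)
```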

You can also deploy the Load Balancer in different regions. Placing it closer to your target audience reduces latency. You can also target specific CPU Nodes or VPCs in different regions, which lets you manage traffic from various parts of the world.
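One way to reason about region placement is to compare latency from your audience to each candidate region. The sketch below assumes you already have round-trip measurements and a rough traffic split per audience. It picks the region with the lowest traffic-weighted latency. The region names and numbers are made up for illustration, and `best_region` is a hypothetical helper, not a platform feature.

```python
def best_region(latency_ms: dict[str, dict[str, float]],
                traffic_share: dict[str, float]) -> str:
    """Pick the region with the lowest traffic-weighted latency.

    latency_ms[region][audience] is a round-trip time in milliseconds;
    traffic_share[audience] is the fraction of requests from that audience.
    """
    def weighted_latency(region: str) -> float:
        return sum(share * latency_ms[region][audience]
                   for audience, share in traffic_share.items())

    return min(latency_ms, key=weighted_latency)


# Made-up numbers for illustration only.
latency_ms = {
    "region-a": {"europe": 20.0, "north-america": 110.0},
    "region-b": {"europe": 100.0, "north-america": 15.0},
}
traffic_share = {"europe": 0.7, "north-america": 0.3}
print(best_region(latency_ms, traffic_share))
# region-a
```

With 70% of traffic from Europe, `region-a` averages about 47 ms against about 74.5 ms for `region-b`. A different traffic split can change the answer.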

By connecting the Load Balancer to your CPU Nodes, you create a more resilient system that automatically distributes traffic, improves performance, and reduces the risk of downtime.